All,

I think now is a good opportunity to return to methodology and the pitfalls of score merging.

In this study, each of nine strong minded experts were asked to evaluate 12 binoculars using 29 7-point Likert ratings. The numbers on such scales are

ordinal, i.e., they reflect direction, but not distance. In other words, we have no way to know whether the psychological distance between 1 and 2 is the same as the distance between 3 and 4, or 6 and 7. And, in fact, the underlying psychological distances may be assumed to vary between observers. If we assume each observer is self-consistent, however, we can add such ratings within his own questionnaire, but not between observers.

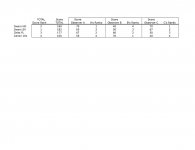

To illustrate what can happen, in the attached table I've constructed a simplified set of ratings from three hypothetical observers. If we assume the score totals were obtained from 10 7-point Likert ratings, the max possible score for a binocular is 10x7 = 70, and the min. possible score is 10x1 = 10. I've given the binoculars real names just to keep it interesting.

As we can see, the individual rankings of our three experts is different, although A and C probably come from a similar opinion pool, and B is obviously a contrarian. But now see what happens to the total ranking when their scores are totaled. (I did this purposely

) Lo and behold, the Swaro SV comes out number 1, while none of the individual observers actually rated it first. And, the Swaro HD, which was #1 in the eyes of experts A and C, now drops into the dust bin of history because it "lost."

Essentially, that's why I've asked the authors of the article to unscramble the egg and let us see what the individual ratings are. Trying to make sense of aggregates could be bad for your brain, to say nothing about the reputation of the binocuars. :eek!:

Ed

PS. I've added a .pdf attachment to make it easier.