beardedbeard

New member

Hi there, folks. I'm not a nature photographer myself, though after going through some of the posts in your forum I might just take up the hobby. I came here for a specific reason, which I'll describe in a moment, but I admit I spent quite some time just hopping between posts and enjoying the scenery.

I was looking for digiscoping resources, and I think this is where I'll find experienced answers to some basic questions. I'm working on a project for long-distance, real-time digital monitoring.

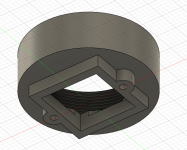

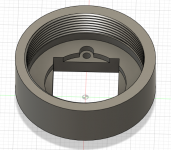

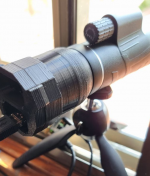

The setup is pretty straightforward: a Raspberry Pi (running on battery) inside a custom 3D-printed housing, which also holds a USB camera (based on the IMX291 sensor). The housing has threads around the lens mount, so I can attach adapters to fit either a monocular or a spotting scope. An example of the rig is in the next picture (the Pi is detached from the camera housing, as these are two separate pieces).
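With a camera lens placed directly behind an eyepiece (afocal coupling), two numbers are worth checking for each lens/scope pair: the scope's exit pupil (objective diameter divided by magnification) and the camera lens's entrance pupil (roughly focal length divided by f-number). The minimal sketch below reports which of the two limits the aperture and the resulting working f-number. It assumes ideal thin optics, a hypothetical 8x42 monocular and hypothetical f/2.0 M12 lenses, none of which come from the post. It also assumes the adapter places the camera lens's entrance pupil at the eyepiece's exit pupil (the eye-relief position), which is the usual target for afocal coupling; a lens sitting too far back clips the edges of the field regardless of these numbers.

```python
# Afocal coupling sketch: which aperture limits the system, and the working f-number.
# Hypothetical values: an 8x42 monocular and M12 lenses at f/2.0 (not from the post).


def scope_exit_pupil_mm(objective_mm: float, magnification: float) -> float:
    """Diameter of the beam leaving the eyepiece: objective diameter / magnification."""
    return objective_mm / magnification


def lens_entrance_pupil_mm(focal_mm: float, f_number: float) -> float:
    """Approximate entrance pupil of the camera lens: focal length / f-number."""
    return focal_mm / f_number


def working_f_number(camera_focal_mm: float, f_number: float,
                     objective_mm: float, magnification: float) -> float:
    """Working f-number of scope + camera, ignoring vignetting and aberrations.

    The effective focal length is camera_focal_mm * magnification. The limiting
    aperture is either the scope objective or the camera pupil scaled back
    through the scope (entrance pupil * magnification), whichever is smaller.
    """
    f_eff = camera_focal_mm * magnification
    camera_pupil_at_objective = lens_entrance_pupil_mm(camera_focal_mm, f_number) * magnification
    limiting_aperture = min(objective_mm, camera_pupil_at_objective)
    return f_eff / limiting_aperture


if __name__ == "__main__":
    OBJECTIVE_MM, MAG, F_NUMBER = 42.0, 8.0, 2.0  # hypothetical 8x42 scope, f/2 lenses
    exit_pupil = scope_exit_pupil_mm(OBJECTIVE_MM, MAG)
    print(f"scope exit pupil: {exit_pupil:.2f} mm")
    for f in (6, 8, 12, 16):
        ep = lens_entrance_pupil_mm(f, F_NUMBER)
        limiter = "camera lens" if ep < exit_pupil else "scope"
        n = working_f_number(f, F_NUMBER, OBJECTIVE_MM, MAG)
        print(f"{f:>2} mm f/{F_NUMBER}: entrance pupil {ep:.1f} mm, "
              f"limited by {limiter}, working f/{n:.2f}")
```

Under these assumptions the shorter lenses set the aperture themselves, while the longer ones are limited by the scope's exit pupil, so their working f-number rises.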

I'm reading up on optics and building my understanding by trial and error. At the moment there are several issues I can't really figure out, and I'd appreciate it if your experience could help me understand the science behind them.

Issue #1 - Non-uniform focus across an image

Since the images I take pass through several optical devices, I understand there's no win-win here and some loss of quality is inevitable. I also understand that the quality of the monocular matters a lot.

However, with any lens on the Pi (6mm, 8mm, 12mm and 16mm, in M12, M16 or CS-mount), the images I get through this monocular (or a Bresser one) have non-uniform focus across the frame. At first I thought it was due to some kind of distortion, so I tried all sorts of combinations (lens type, lens focal length and two monoculars). Although the non-uniformity changes, it's still very much there, and I think it's safe to say 25-40% of the image (in different places) isn't properly focused. Here is just one example of such an outcome (the most focused areas are circled in red):

In this example, what seems fairly obvious is that the non-uniformity is symmetrical. So I suspect it has to do with the fact that I'm using a camera lens (this time a 12mm M12) to capture an image that has already been optically "enhanced" by the monocular. Can anyone help me with the following:

1) Identifying this optical behavior. Does it have a name? ("non-uniform focus" is just a brainf*rt I had)

2) Working out the ground rules of this behavior. That is, how can I know its limits, so that I can minimize its effect by choosing the right combination of lens type and focal length (and, if needed, camera sensor size)? I tried to find my way around with optical calculators (and visualizers), but I'm not sure I'm heading in the right direction.
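One ground rule often used in afocal digiscoping is to compare the camera's field of view with the eyepiece's apparent field of view (AFOV). If the camera lens sees a wider angle than the eyepiece delivers, the frame corners fall outside the eyepiece field (dark vignetting); even short of that, the outer parts of the frame are formed by the outer zone of the eyepiece field, where eyepiece aberrations such as field curvature and astigmatism are typically largest. The sketch below computes the camera's diagonal field of view and the shortest focal length that keeps the frame inside a given AFOV. It is one candidate check to test, not a diagnosis of the pattern in the photo. Assumptions: an ideal rectilinear lens (real M12 lenses often have noticeable distortion), an IMX291 active area of about 5.57 x 3.13 mm (1920 x 1080 pixels at a 2.9 µm pitch, from the sensor's published spec rather than the post), and a hypothetical 50° AFOV to be replaced with your eyepiece's actual figure.

```python
import math

# Assumed IMX291 active area (1920 x 1080 px at 2.9 um pitch); check your module's datasheet.
SENSOR_W_MM = 1920 * 0.0029
SENSOR_H_MM = 1080 * 0.0029
SENSOR_DIAG_MM = math.hypot(SENSOR_W_MM, SENSOR_H_MM)


def diagonal_fov_deg(focal_mm: float, diag_mm: float = SENSOR_DIAG_MM) -> float:
    """Diagonal field of view of an ideal rectilinear lens, in degrees."""
    return math.degrees(2 * math.atan(diag_mm / (2 * focal_mm)))


def min_focal_for_afov(afov_deg: float, diag_mm: float = SENSOR_DIAG_MM) -> float:
    """Shortest focal length whose diagonal FOV fits inside the eyepiece's apparent field."""
    return diag_mm / (2 * math.tan(math.radians(afov_deg) / 2))


if __name__ == "__main__":
    APPARENT_FOV_DEG = 50.0  # hypothetical eyepiece apparent field of view
    print(f"sensor diagonal: {SENSOR_DIAG_MM:.2f} mm")
    print(f"min focal length for {APPARENT_FOV_DEG:.0f} deg AFOV: "
          f"{min_focal_for_afov(APPARENT_FOV_DEG):.1f} mm")
    for f in (6, 8, 12, 16):
        fov = diagonal_fov_deg(f)
        verdict = "fits" if fov <= APPARENT_FOV_DEG else "exceeds eyepiece field"
        print(f"{f:>2} mm lens: {fov:5.1f} deg diagonal -> {verdict}")
```

With these assumed numbers, a 6mm lens would ask for more angle than a 50° eyepiece provides, while 8mm and longer stay inside it; a sensor with a larger diagonal pushes the minimum focal length up proportionally.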

My aim is to minimize this effect as much as possible. By the way, with other focal lengths (8mm, for example) I obviously get less zoom, but the boundaries of the effect stay pretty much the same.
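The "less zoom" with shorter lenses follows from how afocal coupling works: the scope multiplies the camera lens's focal length, so the effective focal length is roughly camera focal length times scope magnification. The sketch below turns that into scene coverage at a given distance, which is the number that matters for monitoring. It assumes a pinhole model, an IMX291 sensor width of about 5.57 mm (1920 pixels at 2.9 µm, from the sensor spec rather than the post), a hypothetical 8x monocular and a hypothetical 500 m subject distance. The millimeters-per-pixel figure is purely geometric; the detail actually resolved is further limited by the optics.

```python
# Afocal "zoom": the scope multiplies the camera lens focal length.
# Assumed IMX291 sensor width (1920 px at 2.9 um) and a hypothetical 8x monocular.
SENSOR_W_MM = 1920 * 0.0029
SENSOR_W_PX = 1920


def effective_focal_mm(camera_focal_mm: float, scope_magnification: float) -> float:
    """Effective focal length of camera lens + scope in an afocal setup."""
    return camera_focal_mm * scope_magnification


def scene_width_m(distance_m: float, f_eff_mm: float,
                  sensor_w_mm: float = SENSOR_W_MM) -> float:
    """Horizontal scene width covered at distance_m (small-angle pinhole model)."""
    return distance_m * sensor_w_mm / f_eff_mm


if __name__ == "__main__":
    MAG, DISTANCE_M = 8.0, 500.0  # hypothetical magnification and subject distance
    for f in (6, 8, 12, 16):
        f_eff = effective_focal_mm(f, MAG)
        width = scene_width_m(DISTANCE_M, f_eff)
        mm_per_px = width * 1000 / SENSOR_W_PX
        print(f"{f:>2} mm lens: f_eff {f_eff:.0f} mm, {width:.1f} m wide at "
              f"{DISTANCE_M:.0f} m, ~{mm_per_px:.0f} mm per pixel")
```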

Issue #2 - Getting the most out of such a rig for digiscoping

1) The camera is focused manually. Are there any specific guidelines on what focus the camera lens should be set to before attaching it to the monocular/spotting scope? I haven't found any rules of thumb for this. Should I focus the lens at the distance of the monocular eyepiece, or on the horizon? Either way I then have to adjust the monocular's focus to compensate, of course, but I was wondering whether, aside from that, it affects image quality or the non-uniform focus issue described above.

2) If I'm limited to the rig setup above, are there any optical guidelines for choosing the camera lens focal length (again, assuming I'm already losing quality because of all the optics involved)? Would it be wiser to choose a specific focal length (6mm, 8mm) because of a particular optical effect (such as less distortion), or am I not even asking the right question?
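A rule of thumb commonly given for afocal digiscoping is to set the camera lens to infinity and do all the focusing with the scope. A scope focused for a relaxed eye sends roughly parallel light out of the eyepiece, so a camera at infinity matches the condition eyepieces are typically designed for; the camera lens is imaging the scope's virtual image, not the eyepiece glass, so focusing on the eyepiece itself isn't what's needed. The scope can usually compensate for a camera set closer, at the cost of running the eyepiece away from that condition. The thin-lens sketch below (an idealization, not a model of any real M12 lens) shows why "focus on the horizon" is a practical way to reach infinity: for a 12mm lens, anything beyond a few meters needs almost no extension compared with infinity, while a subject at roughly eyepiece distance (a hypothetical 5 cm here) would need millimeters.

```python
import math


def focus_extension_mm(focal_mm: float, subject_mm: float) -> float:
    """Extra lens-to-sensor distance (beyond the infinity setting) to focus at subject_mm.

    Thin-lens Newtonian form: x * x' = f**2, with x = subject_mm - focal_mm.
    """
    if math.isinf(subject_mm):
        return 0.0  # infinity focus: no extension needed
    if subject_mm <= focal_mm:
        raise ValueError("subject must be farther away than one focal length")
    return focal_mm ** 2 / (subject_mm - focal_mm)


if __name__ == "__main__":
    FOCAL_MM = 12.0  # the 12mm M12 lens from the example
    for label, d_mm in [("eyepiece, ~5 cm", 50.0), ("1 m", 1000.0),
                        ("10 m", 10_000.0), ("horizon", math.inf)]:
        print(f"{label:>15}: extension {focus_extension_mm(FOCAL_MM, d_mm):.3f} mm")
```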

In fact I have other issues too, but to be honest, I barged in here without any beautiful animals with an awesome blurred-background effect, so I'll keep my questions short, hoping you'll take the time to read through it all.

Thanks in advance.
